Crafting a Solid First Prompt

In early 2024, I ran an AI-enablement workshop with iFeel, a company that provides online mental health and wellbeing support for employees, to help them understand how tools like ChatGPT can be utilised at work.

My approach was to review all the official sources of information on prompt engineering at the time (OpenAI's guidelines, Anthropics', free courses, some paid ones). I then aggregated each claim or piece of advice from these sources and converted them into a yes/no question. Things like:

- Have you described and instructed GPT on how to handle any difficult or unusual cases it may encounter in your input?

- Where possible, have you remembered to add at least three examples to your prompt?

- Have you asked GPT to evaluate its own outputs?

- Have you instructed GPT on what to do if it doesn't find the required information in a given text (avoiding hallucinations)?

- Have you checked if GPT has understood your instructions by asking it?

If memory serves, I managed to put over 150 of these types of questions together. I then did my best to group them and give them some kind of relative weight (based entirely on my personal experience and anecdotes I found online).

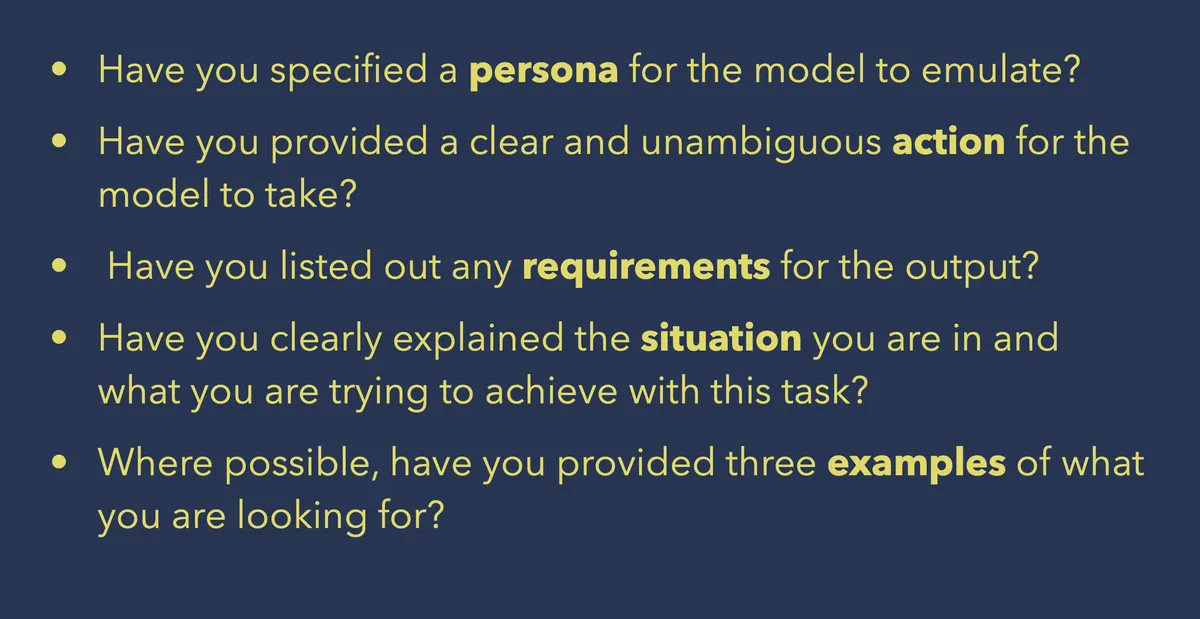

The final result was 5 questions, captured on the slide below, that I believed offered the most bang for your buck.

I wrote a more comprehensive cheatsheet-style blog post about these 5 questions, along with a bunch of examples here.

Looking back at all of this over a year later, the core principles I surfaced held up pretty well. If I were eyeballing a prompt today, some of the language I use has changed slightly, but I'd say the following components make for a decent prompt:

- Provide a role and an objective: “You are a ___ and you will do ___.”

- Specification: Clear, specific and unambiguous guidelines on what to do and what not to do.

- Relevant context: Pull in claim, fact, or specific instructions needed to complete the task.

- Few-shot examples (when useful): give example recipes to ground formatting and expectations. Examples help LLMs as they help humans.

- Any reasoning or chain-of-thought steps: Although I do believe this is becoming less necessary as most models now have this baked in, for older models, you might include directives like “think step-by-step” before the final answer.

- Formatting constraints: JSON, YAML, XML—anything you’ll parse programmatically. Major API providers also support specifying schema/structured outputs (e.g., JSON mode, tool calling). Use formatting constraints when you need structured output.

This won't produce the perfect prompt—but it’s a good starting point.

When you are building your first set of evaluations, a solid starting prompt is important. If your initial prompt is bad, your error analysis will be futile because you’ll end up chasing obvious specification gaps (e.g., you wanted JSON but didn’t ask for it). A good prompt up front means time spent on error analysis focuses on real application failures.

When defining what’s good and bad, it's usually easier to start with potential sources of user unhappiness. By brainstorming what people don't want and listing out unhappy paths, you get a practical definition of “bad”; “good” then becomes the absence of those bad behaviors.

The bulk of the work at this initial stage is elaborating on the "Specification" section of the prompt in as much detail as humanly possible. This corresponds to the "requirements" question in my workshop from last year. Here's what I had to say about this section at the time, and I wouldn't change a word.

"GPTs can’t read your mind. If outputs are too long, ask for brief replies. If outputs are too simple, ask for expert-level writing. If you dislike the format, demonstrate the format you’d like to see. The less GPTs have to guess what you want, the more likely you’ll get it.” This is the opening paragraph from the first of OpenAI’s Six strategies for getting better results from GPT. If you are not sure what to specify, then start with the type of output, its length, format, and style. The documentation also encourages you to list these requirements as bullet points and to phrase them in the affirmative. This is why they are called requirements and not constraints. “End every sentence with a question mark or full stop” is a clearer requirement than “Don’t use exclamation marks” because the latter has the phrase “use exclamation marks” embedded in it.

The more concrete and "implementable" each specification is, the easier it is to check for. We want less "is the bot being polite" and more "did the bot use one of these 12 greetings in the first 300 words of the conversation". Don't worry, we're not writing any evaluators at this stage, but a good way to know whether you're writing decent specifications is to think about whether there is some way to check for them.

We're really just talking about user preferences here. This is where we inject product taste— the more explicit we can get, the less AI has to guess, and the smaller the margin of error becomes.

The catch here is that you have to watch out for over-specification. As prompts get larger, context rot begins to set in, and they become less effective. If a prompt has 3 clear specifications, it will do a better job of following those specifications than if it has 300 specifications.

The balancing act here is to be as explicit as possible with your specifications, but then also limit yourself to only the most relevant specifications needed.

As reasoning models get smarter, some things don't need to be specified. Then again, every now and then, an advanced model will do something surprisingly silly that can be fixed with a simple specification.

Luckily, with evaluations, there is no guessing; you can systematically measure the impact of each specification and then decide if it's worth keeping or not.

I believe it's always better to write every specification possible and then remove them as needed. This way, you have them on file when models upgrade or product requirements inevitably change further down the road.